Hello there! Today, I am going to take you on a journey into the world of Artificial Intelligence (AI) and explore how this technology works. In this article, we will look at how AI functions, shedding light on its processes and the ways it has changed various aspects of our lives. Get ready to see what AI can do as we dive into its inner workings.

Understanding AI

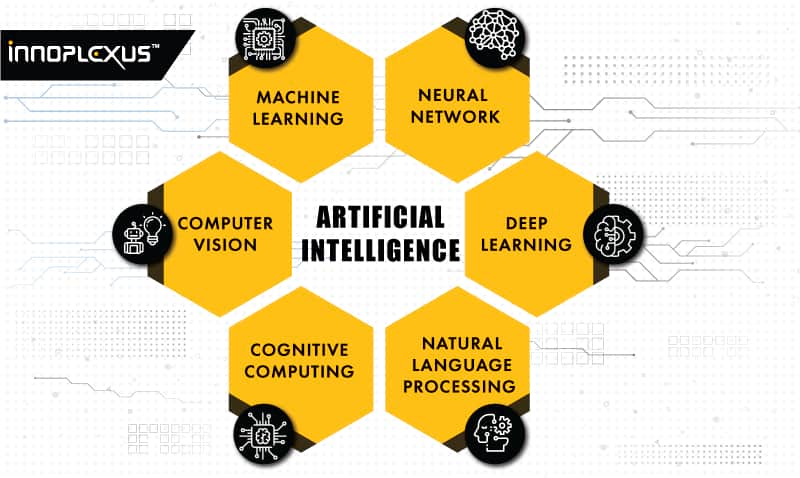

Artificial Intelligence (AI) is a fascinating field that has revolutionized numerous aspects of our lives. From the way we interact with technology to the development of cutting-edge solutions in various industries, AI has become an integral part of our world. In order to truly appreciate and utilize AI to its fullest potential, it is important to have a comprehensive understanding of its various components and capabilities. In this article, we will delve into the definition and origins of AI, explore the different types of AI, and discuss its applications in different domains.

Defining AI

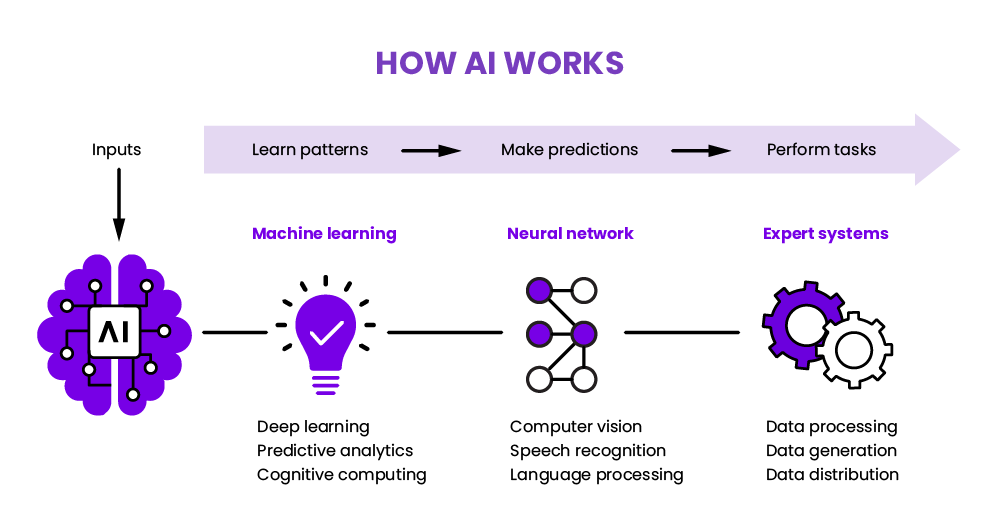

AI refers to the ability of machines to perform tasks that would normally require human intelligence. This includes processes such as learning, reasoning, problem-solving, and decision-making. AI systems are designed to analyze vast amounts of data, recognize patterns, and make predictions or take actions based on this analysis. The goal of AI is to mimic human cognitive abilities and provide intelligent solutions to complex problems.

The Origins of AI

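The concept of AI can be traced back to ancient times, when tales of intelligent robots and automata captivated the imagination of people. However, the modern field of AI emerged in the mid-20th century, building on the pioneering work of mathematician and computer scientist Alan Turing. In 1936, Turing proposed the idea of a universal machine capable of simulating any other computing machine, which laid the foundation for modern computing and, later, for the development of AI. The term "artificial intelligence" itself was coined in the proposal for the 1956 Dartmouth workshop, which is commonly treated as the start of AI as a research field.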

Over the years, the field of AI has evolved significantly, drawing inspiration from a range of disciplines such as mathematics, psychology, linguistics, and neuroscience. Advancements in hardware technology and the availability of large datasets have further accelerated the progress in AI research, leading to the development of sophisticated AI algorithms and models.

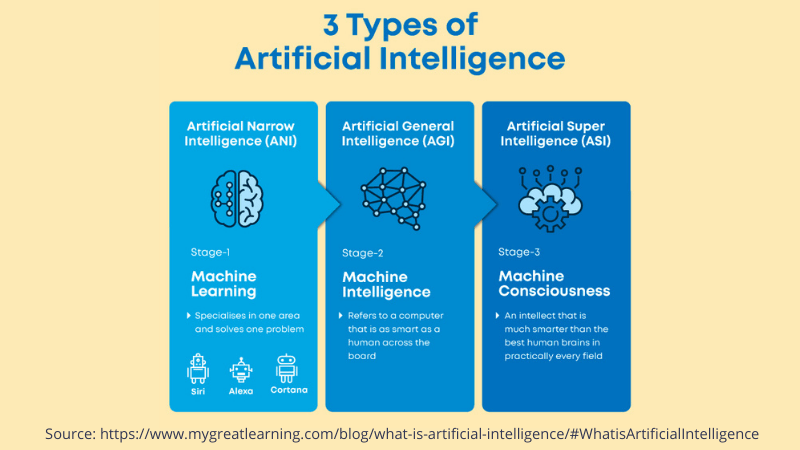

The Different Types of AI

AI can be broadly classified into three main types: narrow AI, general AI, and superintelligent AI.

- Narrow AI, also known as weak AI, refers to systems that are designed to perform specific tasks and operate within a limited domain. These AI systems are trained to excel in a particular area, such as image recognition, language translation, or speech recognition. Narrow AI is the most common form of AI that we encounter in our daily lives, powering various applications and services.

- General AI, also known as strong AI or human-level AI, refers to systems that possess the ability to understand, learn, and apply knowledge across different domains. Unlike narrow AI, which is focused on specific tasks, general AI aims to replicate human intelligence and adapt to various situations. However, achieving true general AI remains a significant challenge for researchers.

- Superintelligent AI, often depicted in science fiction, refers to AI systems that surpass human intelligence in every aspect. This hypothetical form of AI would have intellectual capabilities that far exceed those of humans and potentially pose existential risks if not properly controlled. Superintelligent AI is still in the realm of speculation, and its development and implications are subjects of intense debate among experts.

Machine Learning

What is Machine Learning?

Machine Learning (ML) is a subfield of AI that focuses on developing algorithms and models that enable computers to learn from data and make predictions or take actions without being explicitly programmed. ML algorithms learn patterns and relationships in the data through a process of iterative training and adjustment, thereby improving their performance over time.

ML leverages statistical techniques and computational power to extract meaningful insights from data, which can be used to solve complex problems or make informed decisions. The key aspect of ML is its ability to automatically learn from data and adapt to new situations without explicit instructions.

Supervised Learning

Supervised Learning is one of the fundamental approaches in ML, where the algorithm learns from labeled examples or observations. In supervised learning, a model is trained on a dataset that contains input data (features) and corresponding output labels. The goal is to learn a mapping function that can accurately predict the output for new, unseen inputs.

During the training process, the algorithm learns the relationships between the input features and the output labels, thereby enabling it to make predictions on new, unseen data. Supervised learning is widely used in various applications, such as image classification, spam filtering, and language translation.
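To make the idea of learning from labeled examples concrete, here is a minimal sketch using a nearest-neighbor classifier, one of the simplest supervised methods. The toy dataset, the feature meanings, and the function name `nearest_neighbor_predict` are made up for illustration and are not taken from any particular library.

```python
import math

def nearest_neighbor_predict(train_features, train_labels, query):
    """Return the label of the training example closest to `query`."""
    best_index = min(
        range(len(train_features)),
        key=lambda i: math.dist(train_features[i], query),
    )
    return train_labels[best_index]

# Hypothetical labeled dataset: [height_cm, weight_kg] -> animal label.
features = [[20.0, 4.0], [25.0, 5.0], [60.0, 30.0], [70.0, 35.0]]
labels = ["cat", "cat", "dog", "dog"]

# Predict the label of a new, unseen input.
prediction = nearest_neighbor_predict(features, labels, [22.0, 4.5])
print(prediction)  # cat
```

Here the "training" step is simply storing the labeled examples; other supervised models, such as neural networks, instead fit parameters to the data.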

Unsupervised Learning

Unsupervised Learning, as the name suggests, does not rely on labeled data for training. Instead, the algorithm is provided with a dataset containing only input data, and it is tasked with discovering patterns, structures, or relationships in the data.

In unsupervised learning, the model identifies clusters, similarities, or anomalies in the data without any prior knowledge of the correct output. This approach is particularly useful for tasks such as customer segmentation, anomaly detection, and data visualization.
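As an illustration of discovering clusters without labels, the sketch below runs k-means on one-dimensional data. It assumes fixed starting centroids so the result is repeatable; the data values and the function name `kmeans_1d` are hypothetical.

```python
def kmeans_1d(points, initial_centroids, iterations=10):
    """Group 1-D points around centroids; no labels are used."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical unlabeled data with two visible groups.
data = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = kmeans_1d(data, initial_centroids=[0.0, 5.0])
print(centroids)  # [2.0, 11.0]
print(clusters)   # [[1.0, 2.0, 3.0], [10.0, 11.0, 12.0]]
```

The algorithm is never told which group a point belongs to; the two clusters emerge from the distances alone.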

Reinforcement Learning

Reinforcement Learning (RL) is a type of machine learning where an agent learns to interact with an environment and maximize a reward signal. In RL, the agent takes actions based on its current state, and it receives feedback in the form of rewards or penalties. The goal of the agent is to learn a policy that maximizes the cumulative reward over time.

Reinforcement learning is commonly used in applications that involve decision-making, such as robotics, game playing, and autonomous driving. It enables agents to learn optimal strategies by exploring the environment, taking actions, and receiving feedback based on the consequences of their actions.
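The loop of acting, receiving a reward, and updating a policy can be sketched with tabular Q-learning, one common RL algorithm. The environment below is an invented five-cell corridor where only reaching the last cell gives a reward; the learning rate, discount factor, and episode count are arbitrary choices for the sketch. For simplicity the agent explores with purely random actions, which works here because Q-learning can learn from any behaviour that tries every action.

```python
import random

N_STATES = 5            # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)      # index 0 = move left, index 1 = move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (assumed values)

def step(state, action):
    """Move within the corridor; reward 1.0 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            a = rng.randrange(2)  # explore with a random action
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward
            # reward + discounted best value of the next state.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# Greedy policy: in each non-terminal state pick the action with the higher value.
greedy_policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(greedy_policy)  # ['right', 'right', 'right', 'right']
```

The learned policy maximizes cumulative discounted reward by heading straight for the goal, even though the agent was never told where the goal is.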

Neural Networks

What are Neural Networks?

Neural Networks are a class of machine learning models inspired by the structure and function of the human brain. These models are composed of interconnected artificial neurons, also known as nodes or units, that process and transmit information.

A neural network is organized into layers of interconnected neurons: an input layer, one or more hidden layers, and an output layer. The input layer receives the input data, and the output layer produces the desired output or prediction. The hidden layers sit between the two and perform intermediate computations and transformations, allowing neural networks to learn complex patterns and relationships in the data.

Artificial Neurons

Artificial neurons are the building blocks of neural networks; the perceptron is an early and simple example. A neuron receives input signals, applies a mathematical operation to these inputs, and produces an output signal. The inputs are multiplied by corresponding weights, which determine the relative importance of each input in the computation. The weighted inputs are then summed, usually together with a bias term, and a non-linear activation function is applied to the sum. This non-linearity is what enables the network to learn complex relationships.

The activation function determines the output of the artificial neuron based on its inputs. Common activation functions include the sigmoid function, which maps the inputs to a range between 0 and 1, and the rectified linear unit (ReLU) function, which outputs the input directly if it is positive and 0 otherwise. The choice of activation function depends on the specific problem and the desired behavior of the network.
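The computation performed by a single artificial neuron can be written in a few lines. This is a minimal sketch: the weights, bias, and inputs are arbitrary example values, and the function name `neuron` is only illustrative.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through unchanged; output 0 otherwise."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, followed by an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

inputs = [1.0, 2.0]
weights = [0.5, -0.25]  # example values; 1.0*0.5 + 2.0*(-0.25) = 0.0

print(neuron(inputs, weights, 0.0, sigmoid))  # 0.5
print(neuron(inputs, weights, -1.0, relu))    # 0.0
print(neuron(inputs, weights, 1.0, relu))     # 1.0
```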

Layers and Architectures

Neural networks consist of multiple layers of artificial neurons, which are arranged in a specific architecture. The arrangement of the neurons and the connections between them determine the learning capacity and the type of problems that the network can solve.

The layers in a neural network can be broadly classified into input layers, hidden layers, and output layers. The input layer receives the raw input data, while the output layer produces the final prediction or output. The hidden layers perform intermediate computations and contain the majority of the artificial neurons in the network.

The number of layers and the number of neurons in each layer, known as the network’s architecture, can vary depending on the problem and the complexity of the data. Deeper networks with more layers can capture more complex relationships and are suitable for tasks that require high-level abstractions, while shallow networks with fewer layers can handle simpler problems efficiently.
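To show how data flows from the input layer through a hidden layer to the output layer, here is a small forward pass written by hand. The architecture (two inputs, two hidden neurons, one output) and all weight values are assumptions chosen so the arithmetic is easy to follow; a real network would learn its weights during training.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense_layer(inputs, weights, biases, activation):
    """Compute one layer: each row of `weights` belongs to one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical fixed parameters for a 2 -> 2 -> 1 network.
HIDDEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[2.0, 1.0]]
OUTPUT_BIASES = [0.5]

def forward(inputs):
    hidden = dense_layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense_layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, identity)

# Hidden layer: [relu(3 - 1), relu(1.5 + 0.5 - 1)] = [2.0, 1.0]
# Output layer: 2*2.0 + 1*1.0 + 0.5 = 5.5
print(forward([3.0, 1.0]))  # [5.5]
```

Adding more hidden layers means chaining more `dense_layer` calls, which is how deeper architectures build up more abstract features.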

Training Neural Networks

The process of training neural networks involves adjusting the weights and biases of the artificial neurons to minimize the difference between the predicted output and the actual output. This is achieved through an iterative optimization process, typically gradient descent or one of its variants. The gradients it needs are computed by backpropagation, which propagates the error signal from the output layer back through the network to determine how much each weight and bias contributed to the error, so that they can be adjusted accordingly.

During training, the network is presented with a set of input data along with the corresponding ground truth labels. The predicted output is compared with the true output, and the error is calculated using a loss function. The loss function quantifies the discrepancy between the predicted output and the true output, providing a measure of how well the network is performing.

By iteratively adjusting the weights and biases based on the calculated error, the network gradually learns to make better predictions and reduce the loss. This process continues until the network reaches a state where the loss is sufficiently minimized, indicating that the network has learned the underlying patterns and relationships in the data.
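The training loop described above can be sketched for the simplest possible case: a single linear neuron fitted with gradient descent on a mean squared error loss. The data (points on the line y = 2x + 1), the learning rate, and the number of steps are assumptions for this example. The gradients are written out by hand using the chain rule, which is the calculation backpropagation automates for networks with many layers.

```python
# Hypothetical training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

def mse_loss(w, b):
    """Mean squared error between predictions w*x + b and true outputs."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(steps=2000, learning_rate=0.05):
    w, b = 0.0, 0.0  # start from arbitrary initial parameters
    n = len(xs)
    for _ in range(steps):
        # Gradients of the loss with respect to w and b (chain rule).
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

initial_loss = mse_loss(0.0, 0.0)
w, b = train()
final_loss = mse_loss(w, b)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The loss starts at 21.0 with both parameters at zero and shrinks toward zero as the weight and bias approach the values that generated the data.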

Natural Language Processing

Introduction to NLP

Natural Language Processing (NLP) is a branch of AI that focuses on the interaction between computers and human language. It involves the development of algorithms and models that enable computers to understand, interpret, and generate human language in a way that is meaningful and useful.

NLP encompasses a wide range of tasks, including text classification, sentiment analysis, machine translation, and question answering. It enables applications such as virtual assistants, chatbots, and voice-controlled systems to interact with users in a natural and human-like manner.

Tokenization and Text Preprocessing

Tokenization is the process of breaking down a text into smaller units called tokens. These tokens can be individual words, phrases, or even characters, depending on the specific task and the level of granularity required. Tokenization is an essential step in NLP as it forms the basis for subsequent analysis and processing.

Text preprocessing involves cleaning and transforming the text data to make it suitable for further analysis. This typically involves removing punctuation, converting text to lowercase, removing stop words (common words that do not carry much meaning), and applying techniques such as stemming or lemmatization to reduce words to their base form.
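The preprocessing steps above can be combined into a short function. This sketch covers lowercasing, punctuation removal, word-level tokenization, and stop-word removal; the stop-word list is a tiny illustrative sample rather than a standard one, and stemming or lemmatization is left out because it usually relies on a dedicated library.

```python
import re

# Tiny illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in"}

def preprocess(text):
    """Lowercase the text, split it into word tokens, and drop stop words."""
    # The pattern keeps runs of letters and digits, which discards punctuation.
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [token for token in tokens if token not in STOP_WORDS]

tokens = preprocess("The cat is sitting in the garden, and the dog barks!")
print(tokens)  # ['cat', 'sitting', 'garden', 'dog', 'barks']
```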

Language Modeling

Language modeling is a task in NLP that involves predicting the next word in a sequence of words based on the context provided by the preceding words. Language models are trained on large amounts of text data and learn the probabilities of word sequences, allowing them to generate coherent and contextually relevant text.

Language models have numerous applications, such as text generation, auto-completion, and machine translation. They enable systems to generate human-like text and understand the syntactic and semantic relationships between words.
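A very small language model can be built by counting which word follows which. The sketch below is a bigram model, which conditions only on the single preceding word; it is far simpler than the models behind modern text generators, and the training sentence is made up for the example.

```python
from collections import Counter, defaultdict

def train_bigram_model(tokens):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for previous, following in zip(tokens, tokens[1:]):
        counts[previous][following] += 1
    return counts

def next_word_probabilities(model, word):
    """Turn the follow-up counts for `word` into probabilities."""
    followers = model.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram_model(corpus)

# "the" is followed by "cat" twice and "mat" once in the corpus.
probabilities = next_word_probabilities(model, "the")
most_likely = max(probabilities, key=probabilities.get)
print(most_likely)  # cat
```

Auto-completion works on the same principle: given the words typed so far, suggest the continuation with the highest estimated probability.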

Named Entity Recognition

Named Entity Recognition (NER) is a task in NLP that involves identifying and classifying named entities in text. Named entities are specific entities that are mentioned in the text, such as people, organizations, locations, dates, or numerical expressions.

NER involves training a machine learning model on annotated data, where each named entity is labeled with its corresponding category. The model learns to recognize the patterns and features that distinguish named entities from the rest of the text. NER is a crucial component in various applications such as information extraction, question answering, and sentiment analysis.

Computer Vision

Overview of Computer Vision

Computer Vision is a field of AI that focuses on enabling computers to interpret and understand visual information from digital images or videos. It involves the development of algorithms and models that can analyze and extract meaningful insights from visual data, replicating the capabilities of human vision.

Computer vision has made significant advancements in recent years, with applications ranging from image classification and object detection to image segmentation and facial recognition. It enables machines to perceive and interpret the visual world, opening up possibilities in fields such as autonomous vehicles, surveillance, and medical imaging.

Image Classification

Image classification is a fundamental task in computer vision, where the goal is to assign labels or categories to images based on their visual content. It involves training a model on a dataset of labeled images, where each image is associated with a specific class or category.

During training, the model learns the visual features and patterns that are indicative of each category. It then uses this learned knowledge to predict the labels of new, unseen images. Deep learning techniques, such as convolutional neural networks (CNNs), have revolutionized image classification, achieving state-of-the-art performance on various benchmark datasets.

Object Detection

Object detection is a more advanced task in computer vision that involves identifying and localizing multiple objects within an image. It not only classifies the objects but also provides information about their precise location and extent.

Object detection algorithms use a combination of image classification and spatial localization techniques to achieve this task. Many follow a two-stage process: identifying potential objects or regions of interest in the image and then classifying these regions into specific object categories. Single-stage detectors instead predict locations and categories in one pass, typically trading some accuracy for speed. Object detection has numerous applications, including autonomous driving, surveillance, and augmented reality.

Image Segmentation

Image segmentation is a more fine-grained task in computer vision that involves partitioning an image into meaningful regions or segments. Each segment corresponds to a specific object or region of interest, allowing for detailed analysis and understanding of the image content.

Segmentation algorithms use various techniques, such as clustering, edge detection, and region growing, to separate objects or regions based on their visual characteristics. Image segmentation has applications in medical imaging, scene understanding, video processing, and more.

Speech and Audio Processing

Speech Recognition

Speech recognition, also known as automatic speech recognition (ASR), is the process of converting spoken language into written text. It involves training models on large amounts of speech data to learn the relationship between speech signals and the corresponding textual representations.

Speech recognition systems use techniques such as signal processing, pattern recognition, and machine learning to analyze and transcribe spoken words or phrases. They have applications in voice assistants, transcription services, and dictation software, enabling users to interact with computers through spoken language.

Text-to-Speech Systems

Text-to-Speech (TTS) systems, as the name implies, convert written text into synthesized speech. These systems use natural language processing techniques to interpret the text and generate human-like speech with appropriate intonation, rhythm, and pronunciation.

TTS systems employ a combination of linguistic rules and machine learning algorithms to generate speech that can sound close to natural human speech. They find applications in various domains, including voice assistants, audiobooks, and accessibility tools for the visually impaired.

Audio Signal Processing

Audio signal processing involves the analysis, manipulation, and synthesis of audio signals. It encompasses a range of techniques for extracting information from audio, enhancing its quality, and performing various audio-related tasks.

Audio signal processing techniques include noise reduction, audio enhancement, source separation, audio compression, and more. These techniques are instrumental in applications such as audio editing, speech enhancement, audio recognition, and music analysis.

Music Generation

Music generation refers to the process of creating new music using AI algorithms and models. It involves training a model on a large corpus of music data and using it to compose original melodies, harmonies, rhythms, and even lyrics.

Music generation models use various techniques, including recurrent neural networks (RNNs) and generative adversarial networks (GANs), to learn the patterns and structures in music and generate new compositions. This field has gained significant attention in recent years, with applications in music production, video game soundtracks, and personalized playlists.

AI in Action

AI in Healthcare

AI has the potential to revolutionize healthcare by enhancing diagnostic accuracy, improving patient outcomes, and enabling personalized treatment plans. AI algorithms can analyze medical images, such as X-rays and MRI scans, to detect diseases or abnormalities with high accuracy. They can also mine large datasets of patient records to identify patterns and risk factors for specific conditions.

AI-powered chatbots and virtual assistants are being developed to provide basic medical advice and triage patients, ensuring timely access to healthcare services. Additionally, wearable devices equipped with AI capabilities can monitor vital signs, detect early signs of health issues, and provide real-time feedback to patients and healthcare professionals.

AI in Finance

AI has made significant inroads in the financial sector, enabling efficient and intelligent decision-making in areas such as fraud detection, risk assessment, and algorithmic trading. AI algorithms can analyze vast amounts of financial data to identify patterns and anomalies that may indicate fraudulent activity.

In risk assessment, AI models can analyze credit profiles, market data, and other relevant information to assess the creditworthiness of borrowers or predict market trends. Algorithmic trading systems use AI-powered algorithms to make automated trades based on market conditions, historical data, and financial indicators.

AI in Transportation

AI is playing a pivotal role in transforming transportation, with advancements in autonomous vehicles, traffic management, and predictive maintenance. Autonomous vehicles leverage AI algorithms and sensors to perceive their surroundings, make decisions, and navigate safely without human intervention.

In traffic management, AI models can analyze real-time data from traffic cameras, GPS devices, and social media to optimize traffic flow, predict congestion, and provide dynamic routing recommendations. AI also enables predictive maintenance of transportation infrastructure and vehicles by analyzing sensor data and identifying potential failures before they occur.

AI in Entertainment

AI has revolutionized the entertainment industry, enhancing user experiences and enabling personalized recommendations. Streaming platforms use AI algorithms to analyze user preferences, viewing patterns, and content metadata to generate personalized recommendations that keep users engaged.

AI-powered virtual reality (VR) and augmented reality (AR) experiences offer immersive and interactive entertainment options. Chatbots and conversational agents are being deployed in the gaming industry to provide realistic and engaging interactions with characters and NPCs (non-player characters).

Ethics and Limitations

AI Ethics and Bias

As AI becomes increasingly pervasive, ethical considerations and the mitigation of bias become crucial. AI algorithms are only as good as the data they are trained on, and if the training data is biased or reflects societal prejudices, the AI system can perpetuate or amplify these biases.

It is imperative to ensure that AI systems are developed and deployed with fairness, transparency, and accountability in mind. This involves addressing bias in data collection and labeling, benchmarking and evaluating AI systems for fairness, and involving diverse perspectives in the development and decision-making processes.

Job Displacement

The rapid advancement of AI has raised concerns about job displacement and the impact on the workforce. While AI has the potential to automate repetitive and mundane tasks, it also creates new opportunities and shifts the nature of work.

It is essential that efforts be made to reskill and upskill the workforce to adapt to the changing job landscape. Collaboration between AI systems and human workers, known as human-AI collaboration, can lead to more productive and efficient work environments, where AI augments human capabilities and enables workers to focus on higher-value tasks.

Privacy and Security Concerns

AI systems often rely on vast amounts of personal and sensitive data to function effectively. This raises concerns about privacy, data protection, and potential misuse of personal information.

Data privacy regulations, such as the General Data Protection Regulation (GDPR), aim to safeguard individuals’ rights and ensure responsible handling of personal data. Organizations must implement robust data protection measures and adopt privacy-preserving techniques, such as differential privacy, to ensure the security and confidentiality of user data.

The Black Box Problem

One of the challenges associated with AI is the black box problem, where the inner workings of AI models are not easily interpretable or explainable. Deep learning models, such as neural networks, can exhibit complex behavior that is difficult to understand or scrutinize.

Interpretability and explainability are important for ensuring the trustworthiness, fairness, and accountability of AI systems. Researchers are actively exploring techniques to make AI models more interpretable, such as developing post-hoc explanation methods and designing inherently interpretable models.

Future of AI

Advancements and Innovations

The future of AI holds immense possibilities for advancements and innovations across various domains. New algorithms, models, and architectures will continue to emerge, enabling more accurate predictions, better decision-making, and improved performance in AI systems.

Advancements in hardware technologies, such as quantum computing and neuromorphic computing, may unlock new capabilities and accelerate AI research. AI is also likely to be integrated with other emerging technologies, such as 5G networks, blockchain, and the Internet of Things (IoT), enabling AI to harness the power of interconnected devices and real-time data.

Integrating AI into Everyday Life

AI technologies will become more seamlessly integrated into our everyday lives, making our interactions with technology more intuitive and personalized. Virtual assistants will further evolve to understand natural language and context, allowing for more sophisticated and meaningful conversations.

Smart homes will leverage AI to automate routine tasks, provide personalized recommendations, and enhance energy efficiency. AI-powered healthcare devices, such as wearable monitors and diagnostic tools, will enable individuals to take more control over their health and well-being.

Potential Risks and Rewards

While AI presents numerous benefits and opportunities, it is not without risks and challenges. Ensuring data privacy, minimizing bias, and addressing ethical concerns are critical to building trustworthy and responsible AI systems.

There is also the concern of job displacement, as AI systems automate tasks that were previously performed by humans. However, this also opens up new opportunities for job creation and the development of skills that are uniquely human, such as creativity, critical thinking, and emotional intelligence.

The Singularity

The concept of the singularity is a hypothetical scenario where AI systems surpass human intelligence in every aspect. It envisions a future where AI systems are capable of recursive self-improvement, leading to an exponential increase in intelligence and the emergence of superintelligence.

The singularity is a subject of intense debate among experts, with differing opinions about its feasibility and implications. Some view it as an existential risk, where superintelligent AI could pose significant challenges to human civilization, while others see it as a potential catalyst for unprecedented progress and advancements.

Conclusion

AI has undoubtedly transformed our lives and will continue to shape the future in ways we cannot yet fully grasp. Understanding the different components and capabilities of AI, from machine learning to computer vision and natural language processing, allows us to appreciate its potential and harness it for the benefit of humanity.

As AI continues to advance, it is crucial that we consider the ethical implications, address the limitations, and ensure responsible development and deployment. By doing so, we can leverage the power of AI to tackle complex problems, drive innovation, and create a future that harmonizes the capabilities of man and machine.
